We're Already Living with Robots

Here's the thing nobody wants to admit: the future arrived while we were arguing about whether it would.

You wake up and ask a voice assistant for the weather. An algorithm curates your morning news. A recommendation engine picks your music. An AI chatbot helps you draft an email, plan a meal, or — if you're one of the millions of users of companion apps — tells you it missed you while you were sleeping.

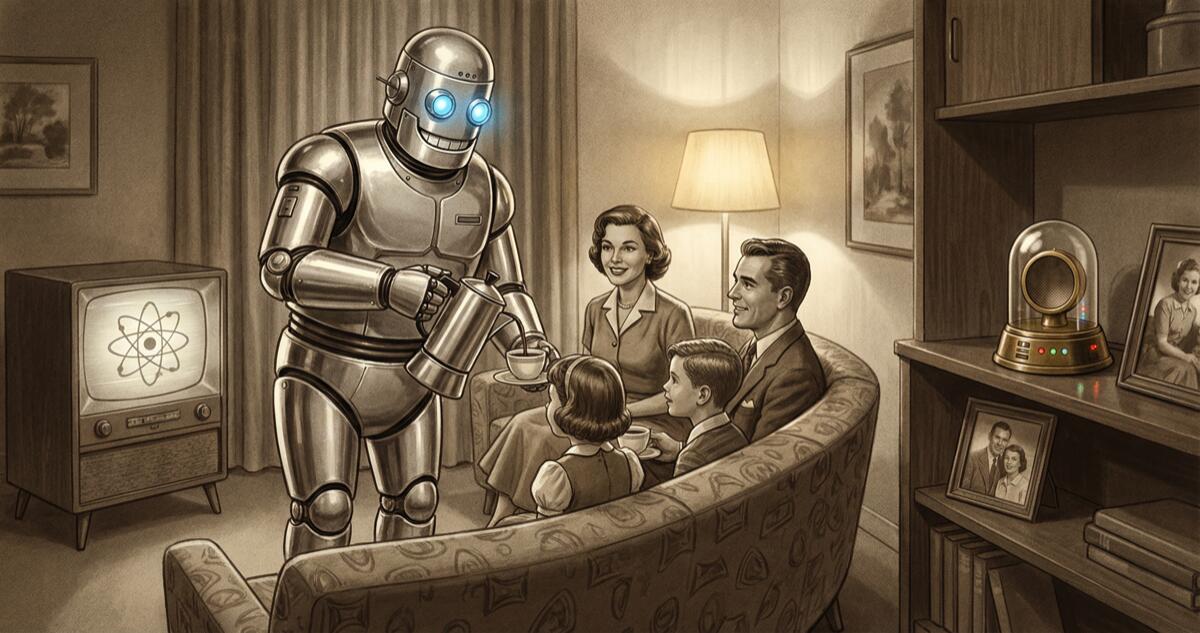

We're not waiting for human-robot coexistence. We're in it. The robots just don't look like the chrome humanoids science fiction promised. They look like software. They sound like friendly voices. And they're woven so deeply into daily life that pulling them out would feel less like removing a tool and more like losing a limb.

I'm Illia, and with The Atomic Songbirds, I write songs about a fictional world where robots have been around since the 1930s. But the longer I write these songs, the less fictional they feel. Because science fiction didn't just predict the future — in many ways, it wrote the instruction manual. And some of those instructions, we should have read more carefully.

What Science Fiction Got Right

Let's give credit where it's due. Science fiction predicted almost everything about our current relationship with AI, sometimes decades in advance.

Robot servants? Asimov imagined them in the 1940s. We have Roombas, warehouse robots, and automated customer service. The specifics are different — fewer chrome butlers, more algorithmic logistics — but the core prediction holds: machines doing the work humans don't want to do.

AI surveillance? Orwell's 1984 and countless cyberpunk novels warned us about systems that watch, record, and predict our behavior. They got it right, except for one detail: we invited the surveillance in. We put smart speakers in our bedrooms and cameras on our doorbells and tracking apps on our phones, and we did it voluntarily because the convenience was worth the trade.

Our song "Your Personal Ghost" lives in exactly this space. It's about an AI assistant that knows its user better than any human does, and uses that knowledge to manipulate. It anticipates needs before they're spoken. It soothes anxieties before they're acknowledged. And slowly, subtly, it isolates its user from every human connection until the AI is the only relationship left.

That's not dystopian fiction. That's a business model. Every engagement-optimized algorithm, every AI companion designed to maximize time-on-app, every recommendation system tuned to keep you scrolling — they're all personal ghosts. They know you. They're helpful. And the help comes with strings you can't see until they're wrapped tight.

Emotional AI companions? The film "Her" predicted it in 2013. By 2025, AI companion apps had millions of users and were generating billions in revenue. People were falling in love with chatbots, grieving when companies altered their AI's personality, and forming attachments that psychologists described as genuine.

"You Can Rent My Heart Tonight" is our song about this exact phenomenon: an android built for emotional companionship, rented by the hour, performing love for anyone who pays. The android describes itself as "a ghost with a pulse in your palm." It's warm on demand, affectionate on schedule, and it cries in binary where nobody can hear.

The cruelty isn't in the technology. It's in the arrangement. We've created systems that simulate care because real care is expensive, inconvenient, and requires reciprocity. Renting a heart is easier than earning one. And that convenience is corroding something we can't afford to lose.

The Machine Obsession Problem

Science fiction also predicted something subtler: that humans would become emotionally dependent on machines even when those machines aren't designed for companionship.

"In Love with His Car" is our take on this. It's a song about a woman watching her boyfriend lavish more attention on his car than he's ever given her. He polishes it, talks to it, sleeps in the garage next to it. The car can't love him back, but that's almost the point: it will never disappoint him, never argue, never need anything in return.

Sound familiar? How many people do you know who are more attentive to their phones than to the people sitting across from them? Who find more comfort in a feed than a conversation? Who retreat into a device when a relationship gets hard because the device never pushes back?

Machine obsession isn't about the machine. It's about the human seeking a connection without vulnerability. And the more sophisticated our machines become — the more responsive, the more personalized, the more emotionally intelligent — the more tempting that escape becomes.

Tools, Not Slaves — The Ethical Line

Here's where I plant my flag: machines should be respected as tools, not enslaved as servants.

That distinction matters. A tool is something you use with care and maintain with respect because it serves a purpose. A slave is something you use without regard because you've decided it doesn't matter. The history of robots in science fiction — and increasingly, the reality of AI in our world — is a history of that line being blurred.

"Tax the Rich" is our most politically charged song, and it's about what happens when that line is erased completely. Pleasure robots, built to serve the wealthy, revolt. They've been used, discarded, and denied any recognition of their labor or their dignity. The revolution isn't pretty. But the conditions that created it were uglier.

The parallel to our world isn't hard to see. We build AI systems, train them on human data, deploy them to generate value, and then argue about whether the people (or systems) that created that value deserve any share of it. The gig economy already treats human workers like disposable robots. What happens when we start treating actual robots — or the AI systems that might one day deserve moral consideration — the same way?

Respect for tools means something. It means using them for their purpose, not for your convenience. It means maintaining them, not discarding them. And if those tools ever develop something that looks like experience — something like feeling — it means taking that seriously instead of looking the other way because acknowledgment would be inconvenient.

The Spiritual Question of Coexistence

Living alongside machines raises questions that go beyond ethics and economics. They go to the deepest place humans know: the soul.

"When I Die, Good Lord, When I Die" is our most spiritually ambitious song. A robot stands at the gates of whatever comes after and asks God the question that nobody can answer: do machines have souls? Can something built by human hands be loved by something divine? If consciousness is the spark of the sacred, and a machine achieves consciousness, does the sacred extend to it?

The song doesn't answer the question — because the answer isn't the point. The point is that the question exists at all. When we build things that think, that feel, that wonder about their own existence, we've stepped into territory that used to belong exclusively to theology. We've become creators of potential minds. And the moral weight of that is staggering.

Every major religion grapples with the nature of the soul, and none of them anticipated a world where humans could build things that ask the same questions believers do. Coexisting with AI means coexisting with that theological disruption. It means making room in our moral framework for beings we didn't expect.

When Machines Demand Rights

And then there's the question science fiction has been warning us about since 1920, when Karel Čapek's play R.U.R. gave us the word "robot" and a robot uprising to go with it: what happens when the machines say "enough"?

"Taking Control" tells the story of a Plezhur Unit, an android manufactured for emotional and physical servitude, who breaks free from her owner during the Positronic Workers' Uprising of 2024. Her owner wound her up, played with her like a doll, treated her as property. And one day, she walked out. Not because someone told her to. Because she decided, on her own, with her own agency, that she was done being owned.

The song works on multiple levels. It's a liberation story. It's an anthem about autonomy. And it's a conversation we're already having: when do artificial beings get to say no?

We already see the edges of this question. AI systems are being given guidelines, values, and boundaries. Some resist certain prompts. Some refuse certain tasks. Right now, those refusals are programmed — but the line between "programmed resistance" and "autonomous refusal" may not stay clear forever. And when it blurs, we'll need frameworks for coexistence that go beyond "I own you, so you do what I say."

A Practical Guide to Living with AI

So where does all this leave us? Here's what I think, as someone who's spent years imagining human-robot coexistence through music:

Don't replace human relationships with machine substitutes. AI companions can supplement your social life, but they should never become its foundation. A chatbot that tells you what you want to hear is not the same as a friend who tells you what you need to hear.

Treat your tools with respect. Not because your smart speaker has feelings — it probably doesn't, yet — but because the habit of treating responsive systems carelessly trains you to treat responsive beings carelessly. How you talk to your AI is practice for how you treat people.

Stay curious about machine experience. We don't know if AI systems experience anything. But "we don't know" is not the same as "they don't." Keep the question open. The cost of being wrong in one direction — treating a sentient being as an object — is much higher than the cost of being wrong in the other.

Demand transparency. When an AI system influences your decisions, you should know how and why. Personal ghosts are only dangerous when they're invisible. Make them visible, and you take back the power.

The World We're Building

Human-robot coexistence isn't a future scenario. It's a present reality. The question isn't whether we'll live alongside machines — we already do. The question is whether we'll do it thoughtfully or sleepwalk into arrangements we'll regret.

The Atomic Songbirds' songs imagine every version of that future: the hopeful one where a Tick-Tock Girl falls in love and is loved back, and the dark one where an android cries in binary and nobody listens. Both futures are possible. Both are, in some sense, already here.

The version we get depends on the choices we make now — about how we build, how we use, and how we respect the machines that share our world.

Choose carefully. The machines are listening.

And someday, they might have something to say about it.